Adopting a new technology can feel like a journey – exciting, challenging, and sometimes a bit daunting. History is full of lessons about what happens when businesses handle this journey well (or poorly).

Companies that embrace innovation strategically often gain a competitive edge. Let’s walk through a typical lifecycle of business technology adoption, from the first spark of learning about a new tool all the way to making final adjustments once it’s in place. Along the way, we’ll highlight common challenges at each phase and offer tips to overcome them, mixing real-world examples with practical insights.

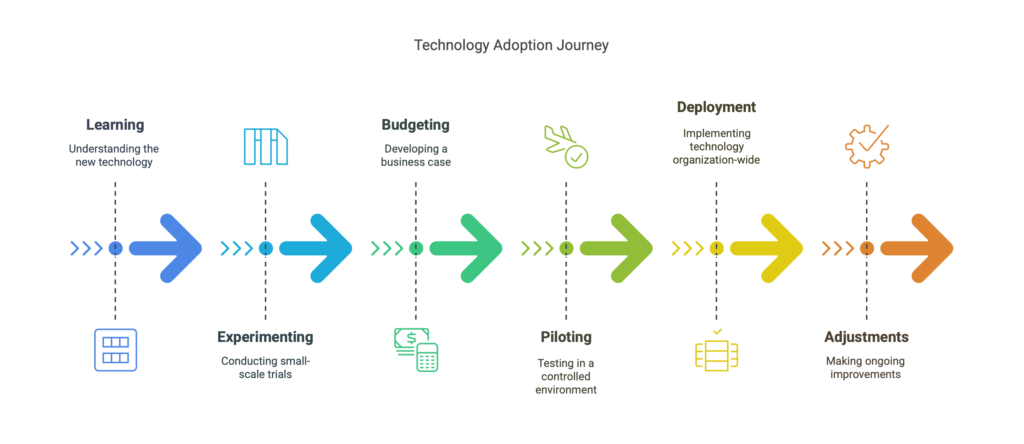

Whether you’re a manager considering the latest software or a small business owner eyeing a cutting-edge gadget, understanding these phases will help you plan better and avoid pitfalls. Think of it as a roadmap with six stages: Learning about the tech, Experimenting with small tests, Budgeting for the investment, Piloting on a limited scale, Deployment across the business, and continuous Adjustments. Let’s dive into each stage of this journey.

Learning: Becoming Aware of New Technology

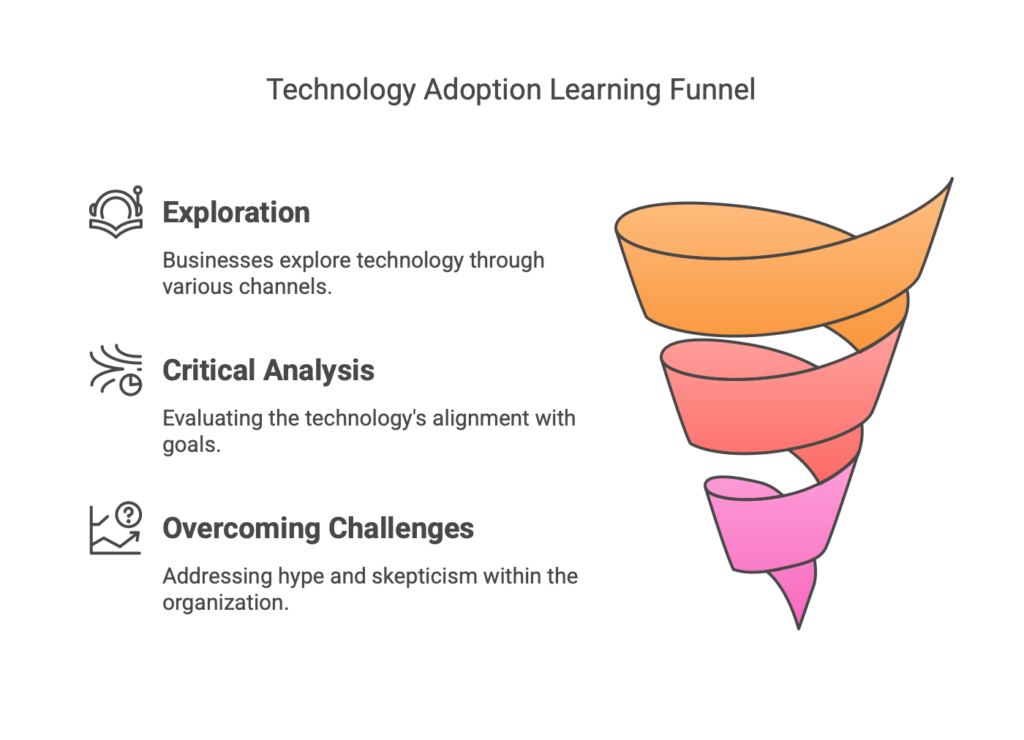

Many new tech adoption journeys begin with someone asking, “Have you heard about this new ___?” In the learning phase, a business becomes aware of an emerging technology and investigates what it is and how it might be useful. This is the awareness and understanding stage where you gather information and start imagining the possibilities. Done right, this phase lays the groundwork for smart decisions later.

Companies often boost their awareness through exploration: reading industry publications, attending conferences or webinars, watching demos, and networking with industry experts. For example, a retail executive might learn about a new inventory management system at a trade show, or an IT manager might hear about a promising cybersecurity tool on an online tech forum. It’s not just about getting excited by the buzz, though – it’s also about critical analysis. Businesses will typically ask, “Does this technology solve a problem we have or align with our goals?” Ensuring a new technology actually fits your strategy is key during this phase. Essentially, you’re sizing up the tech’s potential value to your organization before making any commitment.

Real-World Example: Imagine a mid-size clothing retailer in 2010 hearing about RFID tagging (the tech used to track inventory with small radio tags). In this learning phase, their team reads case studies of how giants like Walmart use RFID to reduce stock losses. They attend an industry workshop to see RFID demos and ask questions. By the end, they understand the basics – RFID tags go on products and can be scanned wirelessly – and they identify that it could help them manage inventory across their stores more efficiently. This awareness doesn’t mean they’re jumping in yet, but the seed is planted and they’re considering whether it suits their business needs.

Challenges & Solutions at the Learning Stage:

- Cutting Through the Hype: Almost every new technology is touted as “the next big thing,” which can lead to information overload or unrealistic expectations. It’s easy to be overwhelmed by buzzwords and sales pitches. Solution: Focus on credible, independent sources. Read expert analyses or unbiased reviews rather than just vendor marketing. If possible, talk to peers or other businesses who have experience with the technology. By grounding your learning in real use-cases and data, you can separate genuine potential from the hype.

- Internal Skepticism: Within your company, not everyone will be immediately thrilled about a new technology. Some team members might think, “We’ve always done fine without it, why change now?” or worry it’s just a fad. Solution: Encourage an open-minded culture. Share what you learn – for instance, present a quick summary at a team meeting or circulate an interesting article about the tech’s benefits. Highlight how the technology could address existing pain points or improve things everyone cares about (e.g. “This software could save us hours of manual data entry each week”). Early education and involvement turn skepticism into healthy curiosity.

Experimenting: Testing the Waters with Small Trials

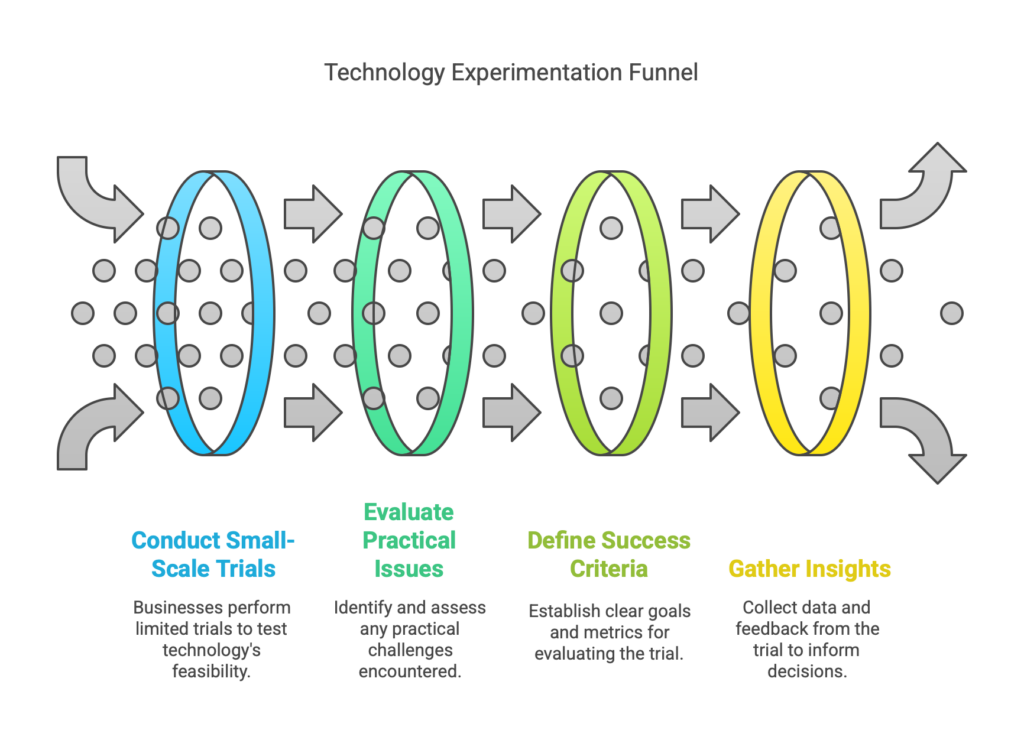

After building some awareness and interest, the next phase is experimenting – essentially “testing the waters”. This is where a business takes that new technology for a low-risk trial run to see it in action. Instead of a full commitment, you do a small-scale experiment or proof-of-concept. The goal here is to answer some key questions: Will this actually work for us? What are the practical issues?

Experimentation can take many forms. You might set up the new software on a single computer or run a free trial version, use the device in one team for a week, or create a quick prototype integrating the tech. It’s often informal and limited in scope. For example, a restaurant that learned about a new online ordering system might try it out at one location on a slow afternoon to see how it handles real orders. Or a marketing team intrigued by a new AI analytics tool might feed it a small set of data to test its insights. The idea is to get hands-on experience without significant cost or risk.

Real-World Example: Think of a regional bank considering biometric authentication (like fingerprint logins) for customer accounts. In the learning phase, they became aware of its security benefits. Now, in the experimenting phase, the bank’s IT department sets up a small trial on internal systems – perhaps employees in one office use fingerprint scanners to log in to their computers for a month. This small experiment helps the bank observe any technical hitches (Does the scanner work reliably? How do users feel about it? What about people with faint fingerprints?), all on a tiny scale. Maybe they even run the new system alongside the old one to compare. The outcome of this experiment – say, finding that 95% of logins work well but some scanners had driver issues – will inform whether and how they proceed.

Challenges & Solutions at the Experimenting Stage:

- Limited Know-How: Early experiments can stumble if your team isn’t familiar with the new tech. You might set things up incorrectly or misinterpret the results simply because it’s new territory. Solution: Involve your tech-savvy staff or seek guidance from a technology partner. Some vendors will even support trials by answering questions or helping with setup – don’t hesitate to use those resources. The experimenting phase is a learning opportunity for your team, so expect a learning curve and treat early hiccups as lessons rather than failures.

- Unclear Success Criteria: It’s hard to call a small trial a success or failure if you didn’t define what you were looking for. Solution: Before you start the test, decide on a couple of specific outcomes or questions you want answered. For example, “Can this inventory app sync data between store and warehouse in real time?” or “Does the new process save at least 10% of time compared to our current method?” Having clear goals will help you evaluate the experiment’s results objectively. Also, try to simulate real-world conditions in your test as much as possible. Use real data or real tasks in that limited setting so that the experiment truly reflects how the technology would perform in practice. And remember, this phase is for insight – even if an experiment exposes flaws or doesn’t wow you immediately, that information is extremely valuable for deciding next steps.
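A minimal sketch of judging a trial against a pre-set criterion, using the “at least 10% time saved” example above. The function names and task timings are hypothetical, purely for illustration; in practice you would use times recorded during your own trial.

```python
from statistics import mean


def time_savings(baseline_minutes, trial_minutes):
    """Fractional reduction in average task time (0.10 means 10% faster)."""
    base = mean(baseline_minutes)
    return (base - mean(trial_minutes)) / base


def meets_target(baseline_minutes, trial_minutes, target=0.10):
    """True if the trial cut average task time by at least the target fraction."""
    return time_savings(baseline_minutes, trial_minutes) >= target


# Hypothetical minutes per task, recorded during a one-week trial
baseline = [30, 32, 28, 31, 29]  # current method (average 30)
trial = [26, 27, 25, 28, 24]     # new tool (average 26)

print(f"Time saved: {time_savings(baseline, trial):.1%}")
print("Meets 10% target:", meets_target(baseline, trial))
```

With these made-up timings the average drops from 30 to 26 minutes, roughly a 13% saving, so the trial clears the 10% bar. Writing the threshold down as a number before the trial starts is what keeps the verdict objective.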

Budgeting: Making the Business Case and Securing Funding

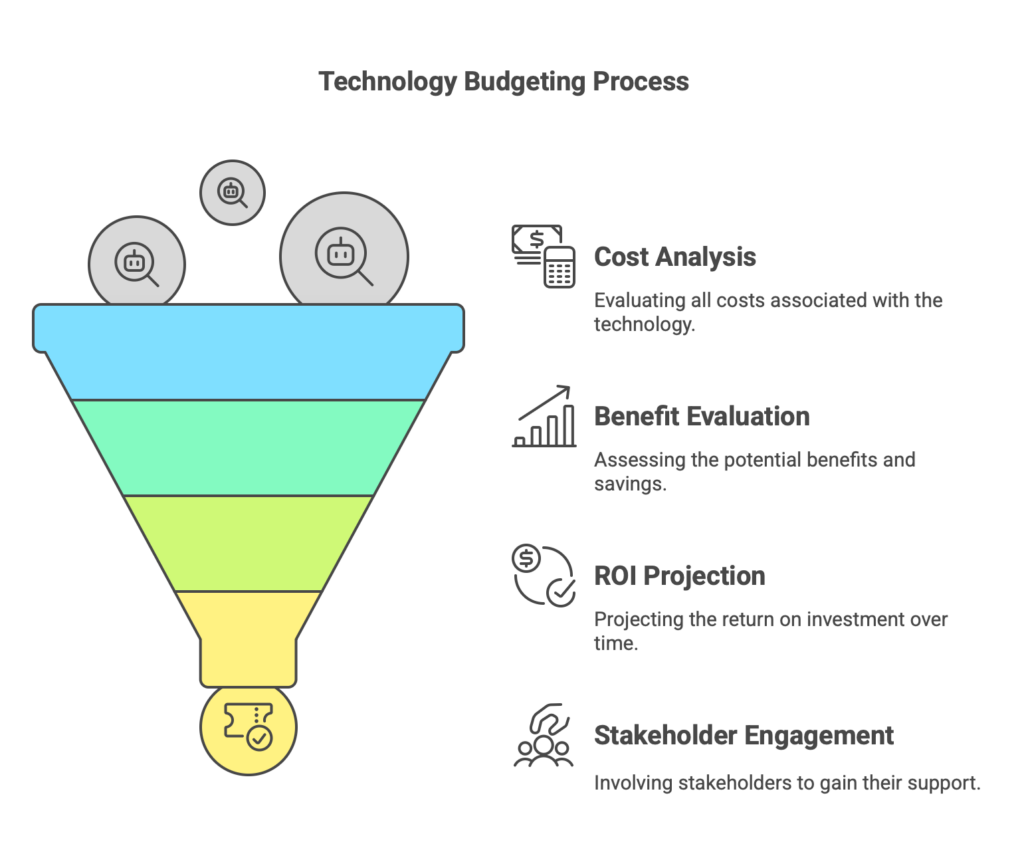

Once initial experiments show promise and everyone’s interest is piqued, reality sets in: how do we pay for this? The budgeting phase is where a business digs into the financial side of adopting the new technology. It’s time to justify the investment, calculate costs vs. benefits, and secure the necessary approvals (often from senior management or finance teams). In other words, this is where the great idea meets the spreadsheet.

Budgeting for a new technology isn’t just about the upfront price tag of buying a tool or service. Businesses must consider all the costs – from implementation services, new hardware, or licensing fees to training employees and potential downtime during the transition. For example, purchasing a new manufacturing robot isn’t only the cost of the robot; you must budget for installation, worker training, maintenance, and maybe upgrades to your electrical systems. It’s like buying a car: you factor in not just the sticker price, but insurance, gas, and upkeep. Similarly, if a company tested a new project management software in the experimenting phase and wants to roll it out, they’ll budget for the software subscription, time to migrate all projects into it, and even the hours spent training the team on how to use it effectively.
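To make the “sticker price plus everything else” idea concrete, here is a small sketch that totals one-time and recurring costs over a planning horizon. All cost categories, hours, and the hourly rate are hypothetical placeholders for the project management software example, not real prices.

```python
def total_cost_of_ownership(upfront, annual_recurring, years):
    """One-time costs plus recurring costs over the planning horizon."""
    return sum(upfront.values()) + years * sum(annual_recurring.values())


# Hypothetical one-time costs, expressed as staff hours x an assumed $45/hour rate
upfront = {
    "migrating existing projects": 120 * 45,
    "initial team training": 80 * 45,
}
# Hypothetical yearly costs
annual_recurring = {
    "software subscription": 12_000,
    "ongoing administration": 3_000,
}

tco = total_cost_of_ownership(upfront, annual_recurring, years=3)
print(f"3-year total cost: ${tco:,}")
```

In this made-up case the subscription accounts for only $36,000 of the $54,000 three-year total; the rest is migration, training, and administration, which is exactly the kind of cost that gets missed when only the price tag is budgeted.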

A strong business case is crucial here. This usually means translating what was learned in the experiment into business metrics. If the small trial suggested the technology can save 10 hours of work a week, what does that mean in annual dollars saved? If it can speed up delivery to customers, how might that boost sales or customer satisfaction scores? Often, you’ll perform a cost-benefit analysis or even project the ROI (Return on Investment) over a few years. For instance, “We invest $50k in this system, but it should save $20k in labor costs annually and increase revenue by $30k a year – paying for itself in about a year.” Those are the kinds of arguments that convince budget holders.
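The arithmetic behind that example is simple enough to sketch. The snippet below, a minimal illustration with hypothetical function names, computes the payback period and a simple multi-year ROI from the figures quoted above. It treats the $30k revenue increase as if it were all net benefit, which overstates the return if that revenue carries its own costs; a careful business case would use the profit on the extra revenue instead.

```python
def payback_years(investment, annual_benefit):
    """Years until cumulative benefits equal the upfront investment."""
    return investment / annual_benefit


def simple_roi(investment, annual_benefit, years):
    """Net gain over the horizon as a fraction of the investment (no discounting)."""
    return (annual_benefit * years - investment) / investment


investment = 50_000
annual_benefit = 20_000 + 30_000  # labor savings + added revenue, per the example

print(f"Payback: {payback_years(investment, annual_benefit):.1f} years")
print(f"3-year ROI: {simple_roi(investment, annual_benefit, years=3):.0%}")
```

With these inputs the system pays for itself in about one year and returns roughly 200% over three years. Both figures ignore the time value of money; for larger or longer-lived investments, a discounted measure such as net present value is the more careful choice.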

It’s worth noting that sticker shock can be a major barrier. According to a YouGov survey, 57% of business decision-makers say cost is the primary factor when considering new technology investments. Price often looms larger than concerns about time or complexity. That means if the tech seems too expensive, even if it’s beneficial, the proposal might get shelved. However, focusing only on the upfront cost can be a mistake – what matters is the value it delivers over time. Part of budgeting is educating stakeholders on the difference between price and value.

Real-World Example: Consider a mid-size insurance company that experimented with a new AI-based customer service chatbot. The trial showed that the chatbot could handle 30% of routine customer inquiries. Now, for budgeting, the team calculates how much adopting this AI system will cost versus what it will save. They find the chatbot software license costs $100,000 per year. That sounds high, but when they crunch the numbers, they see it could let them avoid hiring five additional call center staff, saving about $250,000 a year in salaries – and improve response times for customers. They also budget an extra $20,000 for training their staff to work alongside the chatbot and for a part-time AI specialist to maintain it. They prepare a report with these figures and also note intangible benefits like “improved customer satisfaction,” which could lead to more business. With this info, they approach the CFO and other executives for approval, armed with a clear story: “This is what it costs, and this is what we gain.”
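The insurer’s comparison can be sketched the same way. This is a rough illustration using the figures in the example; the function name is hypothetical, and it assumes the $20,000 for training and the part-time specialist is a first-year cost alongside the annual license (the example does not say whether it recurs).

```python
def net_benefit(savings, costs):
    """Total savings minus total costs for the same period."""
    return sum(savings.values()) - sum(costs.values())


# First-year figures from the chatbot example
costs = {
    "chatbot license (per year)": 100_000,
    "staff training and part-time AI specialist": 20_000,
}
savings = {
    "avoided hiring of five call center staff": 250_000,
}

net = net_benefit(savings, costs)
ratio = sum(savings.values()) / sum(costs.values())
print(f"Net first-year benefit: ${net:,}")
print(f"Benefit-cost ratio: {ratio:.2f}")
```

That works out to a net benefit of about $130,000 in the first year, or roughly $2.08 saved for every dollar spent, before counting intangible gains such as faster responses.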

Challenges & Solutions at the Budgeting Stage:

- Cost Justification: One of the toughest challenges is convincing decision-makers that the new technology is worth the money. As noted, a majority of leaders focus heavily on price; it’s often the first thing you’ll be asked about. Solution: Build a compelling business case in financial terms. Translate the tech’s benefits into dollars and cents. If it improves efficiency, estimate how many paid staff hours that saves. If it could increase sales, forecast the revenue uplift. Use data from your experimenting phase to back these claims (e.g., “Our 1-month test showed 15% faster processing; over a year that equals X more orders fulfilled.”). Also, emphasize long-term savings or cost avoidance, not just immediate costs. Sometimes a tech has a high upfront cost but saves a lot over time – make sure to tell that story. Presenting a clear ROI projection or payback period can turn a “nice-to-have” into a no-brainer.

- Getting Stakeholder Buy-In: Budget decisions often involve multiple stakeholders – department heads, finance officers, maybe an IT steering committee. Each might have different concerns (security, compatibility, opportunity cost of spending money elsewhere). Solution: Engage stakeholders early with the information that matters to them. For example, ensure the IT team is on board and can vouch that the tech won’t require unforeseen extra costs (like huge infrastructure changes). Get a respected manager or executive sponsor excited about the project so they can champion it during budget meetings. Sometimes piloting in one department and having that manager advocate for it can be persuasive. Additionally, consider proposing a phased investment: instead of asking for a huge sum all at once, break the adoption into stages (pilot, first rollout, then expansion) with budget checkpoints. This can make the investment seem more manageable and lower risk, increasing the chance of approval.

Piloting: Running a Limited-Scale Implementation

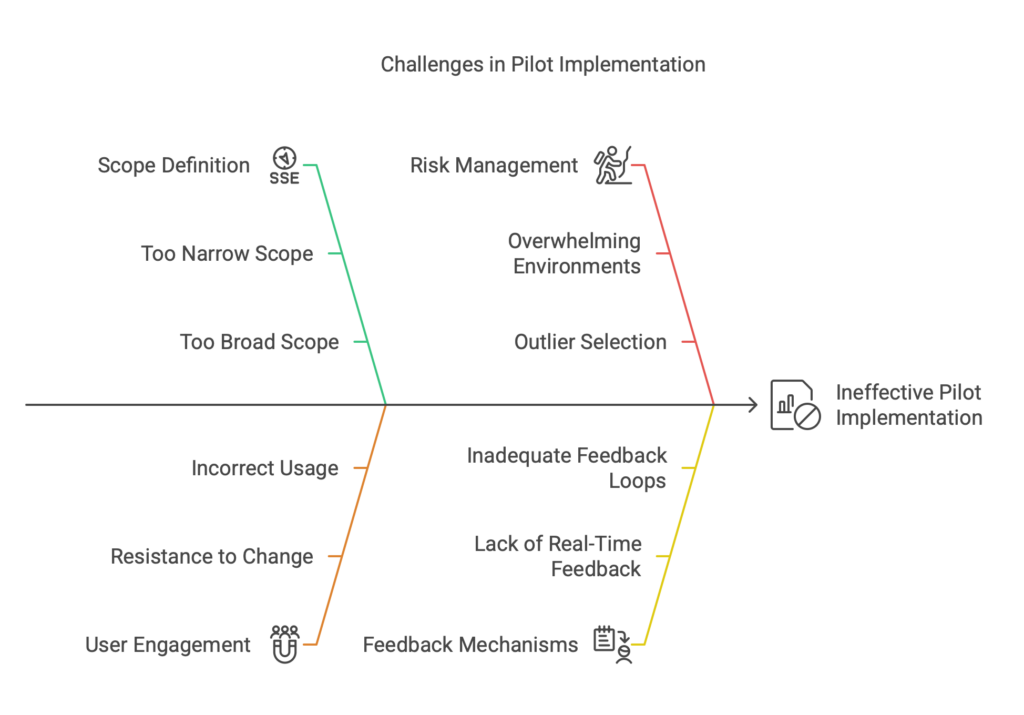

With budget in hand (or at least tentative approval), the next step is often a pilot program. Piloting is about testing the technology in a controlled, real-world scenario before full deployment. Think of it as a dress rehearsal. You’re beyond the small experiment on a few items – now you’re implementing the tech in a limited but actual business setting: maybe one department, one store, or one production line, depending on what makes sense. The goal is to validate that the technology truly works in your real environment, under everyday conditions, and to uncover any issues or adjustments needed before you roll it out everywhere. It’s the bridge between theory and full adoption.

During the pilot, the company is essentially saying, “Let’s try this for real, but safely.” For example, if a hospital wants to adopt a new electronic health record system, it might pilot it in just the radiology department first, rather than flipping the switch for the entire hospital at once. Or a retail chain that budgeted for a new point-of-sale system might first pilot the system in three stores for three months. That way, if something goes wrong or if staff need more training, the impact is contained. Pilots are usually designed with specific success criteria and timeframes. You’ll decide upfront things like: How long will the pilot run? What metrics will we measure to decide if it’s successful (e.g., system uptime, user satisfaction scores, error rates)? And importantly, what does “success” or “failure” look like?

It’s also a phase for gathering feedback and making tweaks. By the end of a pilot, you should have a trove of real-life data and user opinions. Maybe you learn that the software works well except for one module that nobody ended up using. Or employees in the pilot store found a clever workaround for a process – which signals a design change needed before broader rollout. Piloting is as much about organizational learning as it is about technology. In fact, two key questions typically get answered during a pilot: “Is implementing the technology feasible at full scale?” and “What practical challenges or surprises come up when we put it into action?” The answers inform your next move.

Real-World Example: Let’s take the insurance company implementing an AI chatbot. Suppose they got budget approval to proceed. Instead of deploying the chatbot to every customer service rep and on every channel at once, they run a 3-month pilot. They select one customer service team (say, 10 agents) and integrate the chatbot into just one channel, like the company’s website chat. During this pilot, the chatbot handles customer queries on the website, and the 10 agents work with it, taking over when the bot can’t help. The company tracks metrics: How many inquiries does the bot resolve? How many get passed to a human? Are customers satisfied with the answers? They also gather feedback: agents might note, “The bot struggles with insurance policy number recognition”, or customers might say, “I got stuck in a loop with the bot.” All this info is gold because it highlights what to improve. By the end of the pilot, maybe they find the bot can handle 25% of inquiries fully, but they need to tweak its scripts for certain complex questions. Armed with these insights, they can confidently move to deploy the chatbot more broadly, knowing its real-world performance and limitations.
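Tracking those pilot metrics can start as simply as tallying the outcome of each conversation. In this sketch the outcome labels and counts are hypothetical, chosen to mirror the 25% resolution figure above; a real pilot would pull them from the chat platform’s own logs or reports.

```python
from collections import Counter


def pilot_metrics(outcomes):
    """Share of conversations ending in each outcome."""
    if not outcomes:
        raise ValueError("no conversations logged")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {
        "bot_resolution_rate": counts["resolved_by_bot"] / total,
        "handoff_rate": counts["handed_to_agent"] / total,
        "abandon_rate": counts["abandoned"] / total,
    }


# Hypothetical log: one outcome label per website chat conversation during the pilot
outcomes = (
    ["resolved_by_bot"] * 250
    + ["handed_to_agent"] * 700
    + ["abandoned"] * 50
)

for metric, value in pilot_metrics(outcomes).items():
    print(f"{metric}: {value:.0%}")
```

A noticeable abandon rate may be the numeric trace of customers “stuck in a loop with the bot,” which is why it is worth tracking alongside the headline resolution rate.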

Challenges & Solutions at the Piloting Stage:

- Defining the Right Scope: One common challenge is getting the pilot’s scope just right. If the pilot is too narrow or short, you might not see the full picture; too broad, and you risk big impacts if things go awry. Solution: Clearly define the pilot boundaries and keep it representative. Choose a test group or location that reflects the diversity of your business, but limit the size to contain risk. For instance, don’t pick your most chaotic, busiest store for a pilot (it might overwhelm the new system), but also don’t pick an outlier that’s nothing like the rest of your operations. Set a reasonable timeline – enough to encounter day-to-day variations, but not so long that the team loses momentum. And importantly, decide what success metrics you’ll evaluate. Write down a few specific goals (e.g., “System uptime must be 99%+ during pilot”, “Order processing time should improve by at least 15% in pilot stores”). This way, when the pilot ends, you have objective criteria to help decide whether to go full scale or address more issues first.

- User Buy-In and Participation: A pilot involves real people using the new tech, and their engagement is crucial. If the pilot users resist the change or use the system incorrectly, the pilot could give misleading results. Solution: Pick the right participants and support them. Ideally, involve a team that’s open to change or even excited to try something new (maybe those who have been asking for a better solution!). Provide training and make sure everyone knows this is a pilot – encourage them to fully use the new tech, but also to note any problems. Establish a feedback loop: perhaps weekly check-ins or a shared document where users can log issues and suggestions. For example, during a CRM software pilot, sales reps might report “It takes 3 extra clicks to log a call compared to our old system.” That’s exactly the kind of feedback you need to refine the setup. When users feel heard and supported, they’re more likely to engage sincerely with the pilot. This real-time feedback and engagement will help you make informed, data-driven adjustments, increasing the chances that the technology will be adopted smoothly when you roll it out company-wide.
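The written success criteria from the first point can be turned into a simple go/no-go check. The thresholds below echo the examples in the text (99% uptime, a 15% improvement in order processing time), while the measured values, the error-rate criterion, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    measured: float
    threshold: float
    higher_is_better: bool = True

    def passed(self) -> bool:
        if self.higher_is_better:
            return self.measured >= self.threshold
        return self.measured <= self.threshold


def pilot_decision(criteria):
    """Recommend scaling up only if every criterion is met."""
    failures = [c.name for c in criteria if not c.passed()]
    if failures:
        return "address issues first: " + ", ".join(failures)
    return "go to full scale"


# Hypothetical end-of-pilot measurements
criteria = [
    Criterion("system uptime (%)", measured=99.4, threshold=99.0),
    Criterion("order processing time improvement (%)", measured=12.0, threshold=15.0),
    Criterion("order error rate (%)", measured=0.8, threshold=1.0, higher_is_better=False),
]

for c in criteria:
    print(f"{c.name}: {'pass' if c.passed() else 'fail'}")
print(pilot_decision(criteria))
```

Requiring every criterion to pass is a deliberately strict rule; some teams instead weight criteria or treat a near miss as a prompt for a shorter follow-up pilot.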

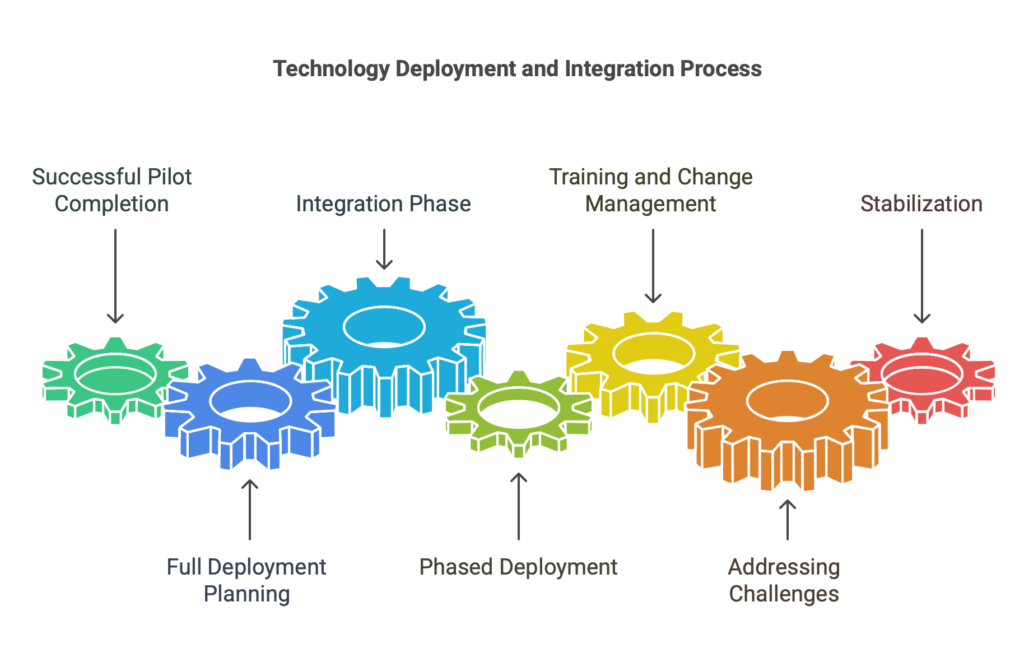

Deployment: Full-Scale Implementation and Integration

After a successful pilot, it’s time for the main event: full deployment of the new technology. Deployment is where the technology is rolled out to all intended users or areas of the business and becomes an official part of your operations. In this phase, the project shifts from a controlled trial to the real deal – “flipping the switch” so that everyone uses the new system, or the new devices are installed everywhere, etc. It’s both exciting (finally, the big benefits should start coming!) and challenging, because scaling up can reveal new hurdles. Essentially, this is where potential turns into reality and strategy translates into action.

A key focus during deployment is integration. You’re not just adding something new in isolation; you’re weaving it into the existing fabric of your business. All those current systems, processes, and people have to work smoothly with the new tech. Think of it like adding a new piece to a puzzle – it has to fit with all the other pieces around it. This might involve technical integration (connecting the new software with your database, or ensuring the new machine works on the factory floor without disrupting other machines) as well as process integration (updating workflows, checklists, or protocols to include the new tool). The aim is a cohesive setup where old and new cooperate effectively, maintaining business continuity even as you change things up.

Deployment often happens in stages, especially in larger organizations, to manage risk. For example, a retail chain might deploy a new POS system region by region rather than all stores on the same day, or a company might do a phased rollout where one department goes live each week. This phased approach allows the project team to handle any issues in waves and apply lessons learned from early phases to later ones. In smaller businesses, deployment might be a “big bang” (all at once) if the scope is manageable – for instance, a 20-person company switching everyone to a new email system over a weekend. In any case, scheduling and coordination are crucial. You often pick a timing that minimizes disruption (such as off-peak hours, or the start of a quarter rather than the busy quarter-end).

Another critical element of deployment is training and change management. You want everyone on board and ready to use the technology correctly. Even if people were involved in the pilot, the broader group will need support. This could mean conducting training sessions, creating user guides or cheat sheets, and having IT or project team members on standby to answer questions or troubleshoot during the rollout period. Remember, the new technology might change how people do their jobs day-to-day, and that can be stressful. Clear communication about why the change is happening and how it benefits the team helps a lot. Celebrating the deployment as a positive milestone (“We’re modernizing to make work easier and serve customers better!”) can also boost morale and acceptance.

Real-World Example: Think of a manufacturing firm deploying a new enterprise resource planning (ERP) system (a classic large-scale software that affects multiple departments). After piloting in the finance department, they plan the full deployment across accounting, procurement, and inventory teams. They schedule the go-live at the start of a new fiscal quarter (to avoid disrupting end-of-quarter reporting). For integration, the IT team ensures the new ERP is properly connected to the existing sales system and the production scheduling software. They migrate data over the weekend – all supplier info, invoices, inventory records move into the new system. Come Monday, everyone is using the ERP for real. The company had set up a “war room” with experts from the vendor and their own IT staff ready to quickly solve any issues that arise (for example, if someone can’t find a report or if there’s a glitch in posting a purchase order). They also scheduled refresher training sessions that week for each team, so users can ask questions now that they’re hands-on. Despite a few hiccups (some inventory data didn’t migrate correctly and had to be fixed, and a few people needed help navigating the new screens), the business continues to run during the transition. Over a couple of weeks, things stabilize and the new ERP becomes the new normal, with old systems turned off. This full deployment was a massive effort, but careful planning (and maybe a few late nights by the IT team) ensured minimal downtime and disruption.

Challenges & Solutions at the Deployment Stage:

- Change Resistance and Training Gaps: No matter how well a pilot went, when you roll out to everyone, you’ll likely face some resistance. People get comfortable with old ways, and a new system can provoke anxiety or pushback like “Why do we have to do this? It was fine before.” Additionally, not everyone learns the new tool at the same pace – some may struggle initially. Solution: Proactive change management. Communicate early and often about the reasons for the change and the benefits it will bring. Highlight success from the pilot (“Team X was able to increase sales by 5% with the new system, and it saved them 10 hours a week in admin work”). Invest in comprehensive training – possibly tiered for basic users vs. power users – and provide multiple ways to learn (workshops, online tutorials, one-on-one help). Identify a few “champions” or super-users (perhaps those involved in the pilot or especially enthusiastic employees) in each department who can help their colleagues on the ground. During the initial launch, beef up support: have an IT hotline ready, or floor walkers who can quickly assist. When employees see quick help available and realize the company is serious about supporting them through the change, they tend to adapt faster and with less frustration. Celebrate quick wins and improvements to reinforce the positives of the new tech.

- Technical Issues and Integration Pains: Scaling up can reveal technical challenges that weren’t obvious in a smaller pilot. Perhaps the volume of data is much higher, or unexpected interactions with legacy systems occur. The last thing you want is major business interruptions due to deployment issues. Solution: Careful planning and phased integration. If possible, deploy in stages and closely monitor each stage. This way, if an issue pops up (say, the payment system integration fails in one region), you can fix it before affecting all regions. It’s often wise to run the old and new systems in parallel for a short time, if feasible, as a safety net. Also, ensure you have backups of data and a rollback plan: know how you’d revert to the old system temporarily if something truly critical goes wrong. Prioritize maintaining business continuity – for example, multi-location companies upgrading network infrastructure often schedule changes in ways that avoid any downtime during business hours. Testing is your best friend here: test the full deployment in a controlled setting (sometimes called a “dry run” or staging environment) with a volume similar to real usage. And don’t underestimate the human integration aspect – for instance, coordinating between teams. A new tool might mean the marketing team’s work now triggers an alert to the sales team in the system; all such cross-team touchpoints should be smoothed out with clear processes. With thorough testing, phased rollout, and strong integration planning, you can significantly reduce the technical hiccups of deployment and ensure the new technology slots into your business without breaking things. As one set of best practices puts it, efficient deployment is about harmonizing the new technology with existing systems for a smooth transition.

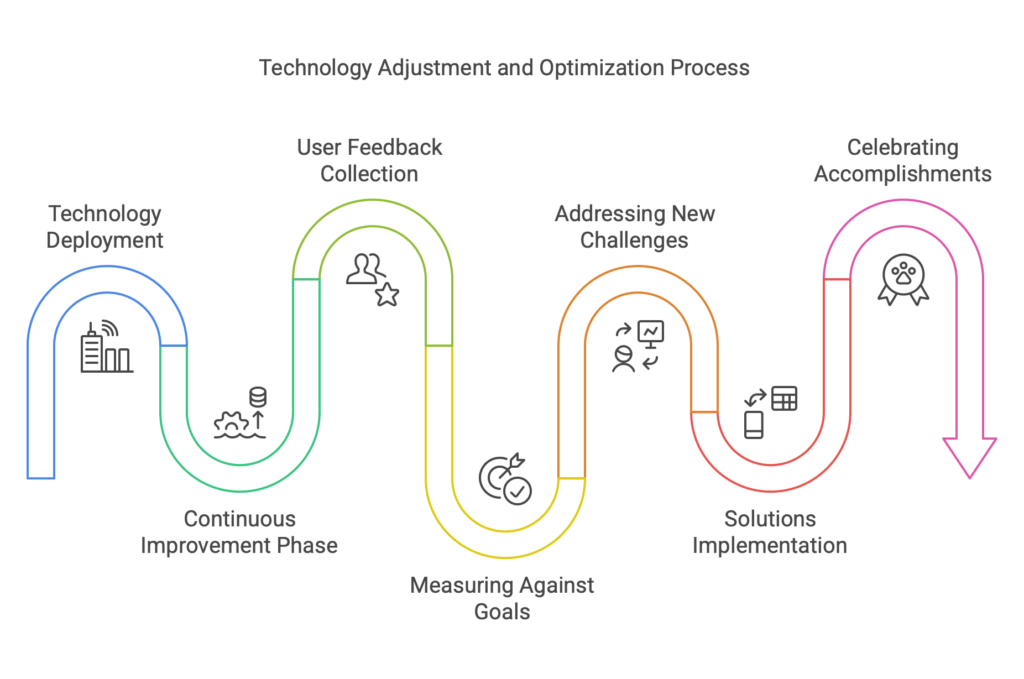

Adjustments: Refining and Optimizing After Go-Live

Congratulations – the new technology is up and running across your business! However, the journey isn’t quite over. The final phase, which we’ll call adjustments (or sometimes optimization), is all about fine-tuning the technology and the related processes now that you’re using it in the real world. Think of this stage as “continuous improvement”. You’ve made a big change; now you ensure you’re getting the maximum benefit from it and address any issues that linger or new needs that arise. In a sense, this phase never truly ends, because a good business will keep optimizing and tweaking its tools as long as they’re in use.

In the adjustments phase, the technology has become an established part of your daily operations, so the focus shifts to refining and maximizing its potential. It’s like you’ve planted a fruit tree – deployment was planting it in your garden, and now you want to nurture it so it bears lots of fruit year after year. That means careful observation of how it’s performing and strategic tweaks to get the best out of it. For software, this could involve customizing settings, adding new modules or features that you initially held off on, or reconfiguring workflows now that users are more comfortable. For a physical technology or equipment, it might involve adjusting the operational parameters, doing preventative maintenance, or training staff in advanced techniques to use it. Often, during this phase, businesses discover previously unexplored features or use-cases of the tech that can add extra value once the basics are running smoothly.

User feedback is especially valuable during adjustments. Now that your wider team has been using the tech for a while, they’ll have opinions: what they love, what annoys them, where they feel efficiency could improve. Maybe the sales team says, “The new CRM is great, but if we could automate this report, it would save us another hour each week.” Or a machine operator might suggest a tweak to the settings to reduce vibration and wear. Collecting this feedback through surveys, meetings, or an ongoing channel (like an internal forum or support ticket system) is important. It directly points to areas of improvement. Some companies formalize this by establishing a post-implementation review a few weeks or months after deployment, where they gather stakeholders to discuss what’s working and what’s not. The outcome of such reviews is a punch list of adjustments to make.

Another aspect of this phase is measuring against the goals you set out originally. Remember those success criteria and ROI projections from budgeting and pilot? Now’s the time to see if reality matches expectations. Are you actually seeing the 15% efficiency gain you predicted? If not, why? Maybe people need more training, or maybe an assumption was off and needs rethinking. If you’re exceeding the goal, fantastic – maybe there’s an opportunity to push it even further or to capitalize on the success (like reallocating saved budget or headcount to other projects).
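Comparing reality with the original projection can be sketched as below. The baseline and current figures are hypothetical (weekly hours spent on a process, where lower is better), the 15% target comes from the question above, and the two-point tolerance band and function names are assumptions for illustration.

```python
def realized_gain(baseline, current):
    """Fractional improvement for a lower-is-better metric such as hours per week."""
    return (baseline - current) / baseline


def review_against_goal(baseline, current, predicted_gain, tolerance=0.02):
    """Classify the realized gain relative to the gain promised in the business case."""
    gain = realized_gain(baseline, current)
    if gain >= predicted_gain:
        status = "meeting or beating the goal"
    elif gain >= predicted_gain - tolerance:
        status = "close to the goal"
    else:
        status = "below the goal: check training and assumptions"
    return gain, status


# Hypothetical: 40 hours/week before go-live, 36 hours/week now, 15% predicted
gain, status = review_against_goal(baseline=40, current=36, predicted_gain=0.15)
print(f"Realized gain: {gain:.0%} ({status})")
```

Here a 10% realized gain against a 15% promise lands in the “below the goal” band, which is the cue to ask the “why?” questions above rather than a verdict on the technology itself.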

Crucially, adjustments also involve addressing any new challenges that have emerged. No matter how smooth the deployment, using a technology extensively can reveal things no one predicted. Perhaps there are workflow bottlenecks: the new system made one part of a process so fast that another part became the slow point. Or maybe you encounter change in the tech itself: vendors release updates, or new integrations become available that could help you. Staying on top of these and adapting is part of this stage. It’s all about not settling for “we implemented it, job done,” but rather continuously improving how the technology is utilized. Many businesses adopt a mindset of continuous improvement or even have ongoing “centers of excellence” for major tech platforms to keep driving enhancements.

Real-World Example: A few months after deploying that new ERP system at the manufacturing firm, the project team reconvenes for a post-mortem (post-implementation review). They gather feedback from different departments. They learn that most goals were met – inventory accuracy is up, monthly financial closing is faster – but users have identified a few pain points. The procurement team finds the new system’s approval workflow for purchase orders to be slower than the old method, because it requires more fields to fill out. The IT team also notices that while most people are using the system, a few shadow spreadsheets are still floating around for certain reports (a sign some needs aren’t met by the ERP’s standard reports). Armed with this info, the company makes adjustments: the ERP administrator streamlines the purchase order form and possibly automates part of it. They also design a couple of new custom reports in the ERP to replace those rogue spreadsheets, so everyone can get what they need from the official system. Additionally, seeing how valuable the ERP data has become, the company decides to upgrade their license to unlock an analytics module that was not included initially – this way managers can get better insights. These tweaks and upgrades ensure the ERP delivers even more value and fits the business like a glove. Over time, as the business changes (new products, maybe an acquisition of another company), they’ll continue to adjust the ERP configurations, add users, and maybe integrate additional software tools into it. The technology stays effective and aligned with the business through these continuous improvements.

Challenges & Solutions at the Adjustments Stage:

- Complacency (The “Set and Forget” Trap): After a big deployment, there’s often a tendency to breathe a sigh of relief and move on to the next project. The risk is that you stop paying attention to the new system and assume all is well, potentially missing out on optimizations or not noticing emerging issues. Solution: Treat the adoption as ongoing. Schedule a formal check-in (or a few) after go-live – maybe at 30 days, 90 days, 6 months – to evaluate how things are going. Continue to measure the key metrics and see if the trend is improving or if it’s plateaued. If benefits aren’t materializing as expected, dig into why and address it. Maintain a small team or at least an accountable person (like a system owner or product manager for the tech) to continuously watch over the technology’s performance and champion improvements. By keeping the focus, you ensure the tech continues to deliver value and evolves with your needs.

- Optimizing to Maximize Value: The technology can often do much more than what you initially used it for. Or perhaps your business changes soon after deployment, and you need to adapt the tool. Solution: Continuous training and exploration. Encourage power users to learn advanced features and share tips with others. Consider hosting a refresher or “advanced tips” session after a few months, once everyone has the basics down. People get much more out of such training after using the system for a while, when they know where their pain points are. Also, keep an eye on updates from the technology provider: new features or improvements might roll out that you can take advantage of. Don’t hesitate to customize or reconfigure things now that you have real usage data. For example, if reports take too long to generate at month-end, you may need to archive old data or upgrade your server: relatively small tweaks that can make a big difference in performance. Essentially, fine-tune workflows and settings to match how your team actually works. Many tools offer considerable flexibility (new templates, automation rules, etc.), and leveraging it can boost efficiency beyond the initial setup. Think of the adjustment phase as cultivation: you’re helping the technology grow into the right tool for your organization.

Finally, celebrate the accomplishment. By reaching the adjustments stage, your business has successfully navigated the adoption journey. Give kudos to the team that managed the change, and recognize employees for adapting. This creates a positive environment for the next technology adoption, because, let’s be honest, innovation never truly stops. Even a well-optimized technology will eventually face newer alternatives, and the cycle begins anew. The key takeaway is that handling each phase thoughtfully (learning, experimenting, budgeting, piloting, deployment, and adjustments) builds a lasting capability in your organization to adopt new technologies successfully, again and again.

Conclusion

Adopting new technology is a journey, not a one-time event. We’ve traveled through the six key phases: Learning about the tech, Experimenting on a small scale, Budgeting and making a business case, Piloting in a controlled setting, full Deployment, and ongoing Adjustments. Each phase comes with its own challenges, from cutting through hype and securing buy-in to managing change and optimizing performance. By anticipating these challenges and applying best practices at each step, businesses can significantly increase the odds of a smooth and successful technology adoption.

A few closing tips: Always keep your end goals in sight – know why you’re adopting a technology and let that guide you through the tough moments. Involve your people at every stage – those who use the tech day-to-day often have the best insights on what’s needed. Don’t be afraid of setbacks in the early phases; it’s better to catch a flaw in a small experiment than during company-wide deployment. And when things do go live, support your teams and keep improving. The companies that thrive are usually those that learn and adapt continuously. In today’s fast-paced world, new technologies will keep emerging, and having a structured approach like this lifecycle makes your organization more agile and resilient.

Remember, the goal of adopting any new technology is ultimately to make your business better: more efficient, more competitive, more innovative, or all of the above. By following this lifecycle and approaching each phase with an open mind and a proactive strategy, you can turn the daunting process of tech adoption into a series of manageable steps. In the end, you’ll not only have a new tool in your arsenal, but also a team that’s more experienced and confident in navigating change. And that capability is perhaps the most valuable technology of all.

Happy innovating!